re:Capping re:Invent: AWS goes all-in on Serverless

Six days in Vegas at AWS re:Invent made it clear that AWS is betting big on serverless and innovating at an incredible pace. Here's what you need to know.

Last week I spent six incredibly exhausting days in Las Vegas at the AWS re:Invent conference. More than 50,000 developers, partners, customers, and cloud enthusiasts came together to experience this annual event that continues to grow year after year. This was my first time attending, and while I wasn't quite sure what to expect, I left with not just the feeling that I got my money's worth, but that AWS is doing everything in their power to help customers like me succeed.

There have already been some really good wrap-up posts about the event. Take a look at James Beswick's What I learned from AWS re:Invent 2018, Paul Swail's What new use cases do the re:Invent 2018 serverless announcements open up?, and All the Serverless announcements at re:Invent 2018 from the Serverless, Inc. blog. There's a lot of good analysis in these posts, so rather than simply rehash everything, I figured I'd touch on a few of the announcements that I think really matter. We'll get to that in a minute, but first I want to point out a few things about Amazon Web Services that I learned this past week.

AWS knows how to throw a party 🎉

No, I'm not talking about re:Play or Midnight Madness (although those were pretty amazing), I'm talking about how incredibly well-organized this six-day conference was. There were five main venues that hosted sessions, chalk talks, workshops, and more. There was breakfast, lunch, snacks, and a seemingly unlimited amount of alcohol. There were certification exams, games, music, two expo centers, and hundreds of support staff who made sure that everything ran smoothly. There was transportation to get you around the campus, security to keep the attendees safe, and a sense of community that made you feel welcome wherever you went. This was unlike any other conference I had ever been to.

AWS employees are as excited about the possibilities as you are

I was fortunate enough to chat with several AWS employees about a wide range of topics. Not only were they extremely knowledgeable, but they were also incredibly passionate about the technologies they were working on. As we discussed things such as serverless microservice patterns, or use cases for DynamoDB streams, they lit up like kids in a candy store. Werner mentioned in his keynote that 95% of AWS features and services are built based on direct customer feedback. Having had so many of these conversations, there is little doubt in my mind that AWS's customer-first approach, in combination with their highly-engaged team members, is at the core of its success.

Major Serverless Announcements

There were some pretty big announcements at re:Invent this year, but Thursday's keynote by Werner Vogels laid out AWS's vision for the future of computing, and guess what? It's not containers. After talking about some of the amazing innovations AWS has made with cloud-scale databases, he outlined several new features that support and enhance the AWS serverless ecosystem. The bottom line: Everyone wants to focus on business logic.

As you can see from the picture below, many large enterprises are starting to go serverless. The benefits have been clear for quite some time, but the implementation has been difficult given the relative immaturity of the tooling, supporting services, and best practices. This re:Invent took aim at the naysayers and released several targeted features to overcome the remaining objections to serverless.

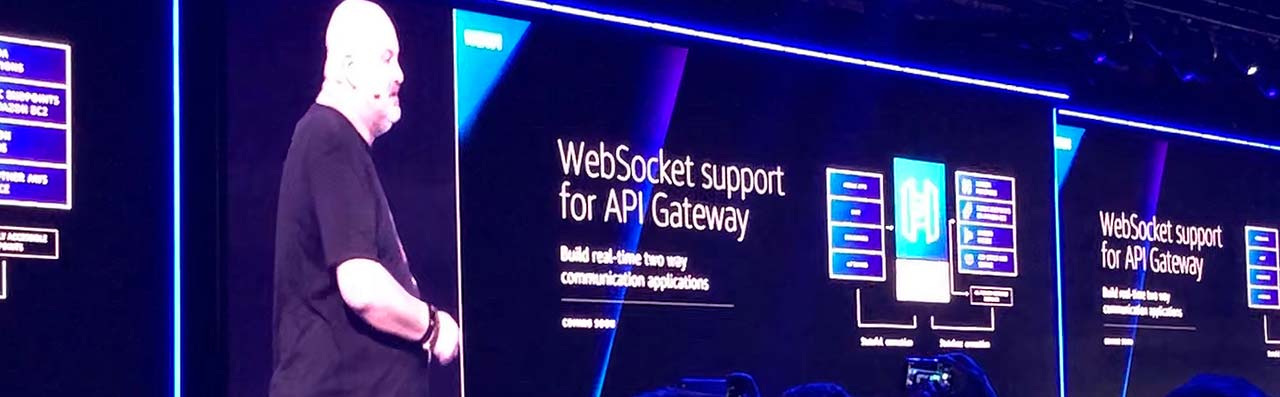

API Gateway adds WebSocket support

Modern web and mobile applications are becoming more and more complex. Single Page Applications (SPAs), web workers, bots, etc. are all demanding two-way communication with clients to provide highly interactive and real-time interfaces. Though there were some hacks to make this work, this has been one of the major limitations of serverless. This announcement changes everything.

The WebSocket protocol has become the standard for two-way communication in modern browsers, and adding it to API Gateway turns those connections into an easy-to-scale, event-based system. This feature will be available soon, but you might also have missed that Amazon CloudFront announced support for the WebSocket protocol two weeks ago.
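
To make the event-based model a bit more concrete, here is a minimal sketch of a Lambda handler that reacts to WebSocket lifecycle events. It assumes the event carries a `routeKey` and a `connectionId` under `requestContext`, with `$connect`, `$disconnect`, and `$default` routes. The in-memory `CONNECTIONS` set is a hypothetical stand-in for a real connection store (such as a DynamoDB table) and would not survive across function containers.

```python
import json

# Hypothetical stand-in for a persistent connection store.
# A real application would keep connection IDs somewhere durable.
CONNECTIONS = set()


def handler(event, context):
    """Dispatch on the WebSocket route that triggered this invocation."""
    request_context = event["requestContext"]
    route = request_context["routeKey"]
    connection_id = request_context["connectionId"]

    if route == "$connect":
        # A client opened a connection: remember it.
        CONNECTIONS.add(connection_id)
        return {"statusCode": 200}

    if route == "$disconnect":
        # The client went away: forget it.
        CONNECTIONS.discard(connection_id)
        return {"statusCode": 200}

    # Any other route: treat the body as JSON and echo it back.
    message = json.loads(event.get("body") or "{}")
    return {"statusCode": 200, "body": json.dumps({"echo": message})}
```

Each connect, disconnect, and message becomes its own function invocation, so there is no long-lived socket server to run or scale.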

Bring your own runtime to AWS Lambda (plus native Ruby support)

AWS Lambda natively supports several programming languages, but there are still plenty of popular languages that it doesn't. Adding native Ruby support obviously opens up possibilities for a whole new community of programmers, but the ability to bring your own custom runtime takes this to a whole new level. Packaging your own runtime is probably a bad idea (do you really want to manage that yourself?), but there are a lot of frameworks out there that could utilize this to modernize applications.

Laravel and Symfony come to mind here. These are two very popular PHP frameworks that require both PHP and a number of components to bootstrap the framework. Maintaining custom runtimes that allow developers to run code on top of these popular frameworks could be an incredibly powerful use case for PHP developers. There might be some refactoring involved, but the relatively modular components could be the perfect building blocks.
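
For a sense of what a custom runtime actually has to do, here is a rough sketch of the polling loop, written in Python purely for illustration. It assumes the Lambda Runtime API is reachable at the host in the `AWS_LAMBDA_RUNTIME_API` environment variable under the `2018-06-01` version prefix. The `handle` function and the injectable `opener` parameter are my own hypothetical names, not part of any AWS library.

```python
import json
import os
import urllib.request

API_VERSION = "2018-06-01"


def handle(event):
    """Hypothetical business logic; a framework bridge would go here."""
    return {"received": event}


def next_invocation(base_url, opener=urllib.request.urlopen):
    """Block until the Runtime API hands us the next event."""
    with opener(f"{base_url}/runtime/invocation/next") as resp:
        request_id = resp.headers["Lambda-Runtime-Aws-Request-Id"]
        event = json.loads(resp.read())
    return request_id, event


def post_response(base_url, request_id, result, opener=urllib.request.urlopen):
    """Report the handler's result for a specific invocation."""
    req = urllib.request.Request(
        f"{base_url}/runtime/invocation/{request_id}/response",
        data=json.dumps(result).encode("utf-8"),
        method="POST",
    )
    with opener(req):
        pass


def run_once(base_url, opener=urllib.request.urlopen):
    """Process exactly one invocation: fetch, handle, respond."""
    request_id, event = next_invocation(base_url, opener)
    post_response(base_url, request_id, handle(event), opener)


def main():
    """Loop forever, as a runtime's bootstrap entry point would."""
    base_url = f"http://{os.environ['AWS_LAMBDA_RUNTIME_API']}/{API_VERSION}"
    while True:
        run_once(base_url)
```

A runtime's `bootstrap` executable would call `main()`. Error reporting and initialization failures are left out here, which is part of why maintaining your own runtime is more work than it first appears.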

Read: New for AWS Lambda – Use Any Programming Language and Share Common Components and Announcing Ruby Support for AWS Lambda

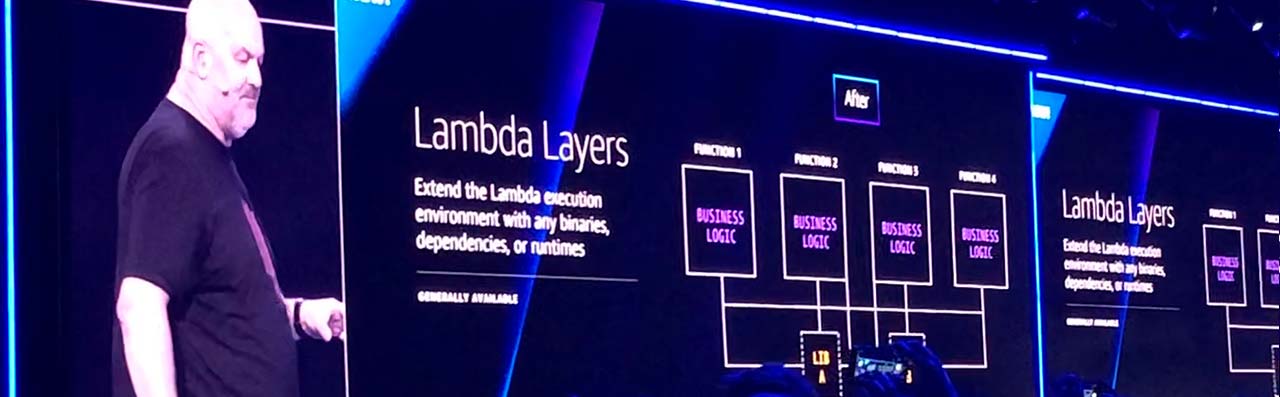

AWS Lambda Layers

Lambda Layers is another extremely powerful feature that will change the way we build and think about serverless applications. Right now, we need to include all shared logic, configuration, and instrumentation in every function. This is relatively easy to manage on a smaller level, but as applications begin to grow, it gets more and more difficult to manage and enforce dependencies.

Using Lambda Layers, developers can package common functionality such as security and monitoring plugins and then layer that onto every function. You can also include difficult-to-package binaries, making it easier for developers to make use of more advanced functionality. If done correctly, this will help enforce compliance and create smarter, more useful serverless applications.
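
As a sketch of the kind of shared logic that could move into a layer, consider a small instrumentation decorator. Both pieces are shown in one block so the example runs on its own. In practice the decorator would be packaged in the layer and imported by each function, and the name `instrumented` is hypothetical.

```python
import functools
import json
import time


# --- Would be packaged once, in the shared layer ---
def instrumented(fn):
    """Wrap a Lambda handler and log how long each invocation took."""
    @functools.wraps(fn)
    def wrapper(event, context):
        start = time.perf_counter()
        try:
            return fn(event, context)
        finally:
            elapsed_ms = (time.perf_counter() - start) * 1000
            # Structured log line that a monitoring tool could pick up.
            print(json.dumps({
                "function": fn.__name__,
                "duration_ms": round(elapsed_ms, 2),
            }))
    return wrapper


# --- Would live in each individual function's deployment package ---
@instrumented
def handler(event, context):
    return {"statusCode": 200, "body": "ok"}
```

The function code stays focused on business logic, while the team that owns the layer can update the instrumentation in one place.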

Read: New for AWS Lambda – Use Any Programming Language and Share Common Components

Step Functions service integrations

Step Functions are state machines that allow you to orchestrate workflows within your serverless applications. These can be extremely useful when managing longer-running tasks, executing tasks in parallel, and handling error retries. But as powerful as this feature was, you still had to use Lambda functions to handle integrations with other managed services.

You can now integrate Step Functions directly with eight additional services such as SNS, SQS, DynamoDB and AWS Batch. This means writing even less code and handing more of the control logic over to AWS. In addition, this means that common workflows will be even less expensive, requiring fewer transitions and Lambda invocations to get the same work done.
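
Here is a hedged sketch of what a two-step workflow might look like with direct integrations, built as a Python dictionary and serialized to a JSON state machine definition. The table name, topic ARN, account ID, and input field `orderId` are all made up for illustration.

```python
import json

# Hypothetical workflow: store an order, then notify a topic.
# Neither step needs a Lambda function in between.
state_machine = {
    "Comment": "Save an order and publish a notification without Lambda glue",
    "StartAt": "SaveOrder",
    "States": {
        "SaveOrder": {
            "Type": "Task",
            "Resource": "arn:aws:states:::dynamodb:putItem",
            "Parameters": {
                "TableName": "orders",  # assumed table name
                "Item": {"orderId": {"S.$": "$.orderId"}},
            },
            # Discard the service response so the next state
            # still sees the original input.
            "ResultPath": None,
            "Next": "NotifyCustomer",
        },
        "NotifyCustomer": {
            "Type": "Task",
            "Resource": "arn:aws:states:::sns:publish",
            "Parameters": {
                # Placeholder ARN with a dummy account ID.
                "TopicArn": "arn:aws:sns:us-east-1:123456789012:order-events",
                "Message.$": "$.orderId",
            },
            "End": True,
        },
    },
}

definition = json.dumps(state_machine, indent=2)
```

Before these integrations, each of those two tasks would have pointed at a Lambda function whose only job was to call DynamoDB or SNS.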

Read: AWS Step Functions Adds Eight More Service Integrations

ALBs now support Lambda as a target

I feel like this announcement may be the most important of them all. Most companies are running monolithic applications. And those companies are almost certainly running their applications behind an Application Load Balancer. It is entirely possible to use API Gateway to apply the Strangler pattern to your existing applications, but that means routing ALL of your traffic through API Gateway.

There are three major problems with that approach:

- API Gateway will become cost prohibitive very quickly

- You are adding another layer of latency to your application

- You have to shift your application traffic to a new endpoint

Being able to execute a Lambda function with an HTTP/S call directly from your load balancer completely eliminates the aforementioned problems. This means that organizations can start strangling parts of their application and handing them off directly to serverless components with zero impact on other systems. This lowers the bar (and significantly mitigates the risks) for companies looking to adopt serverless in stages. 👍
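
To illustrate, here is a minimal sketch of a function that could sit behind a load balancer and take over a single route. It assumes the ALB passes `httpMethod` and `path` in the event and expects a response containing `statusCode`, `statusDescription`, `isBase64Encoded`, `headers`, and `body`. The `/health` route is just an example.

```python
import json


def handler(event, context):
    """Serve one strangled route; everything else is a 404 from this target."""
    path = event.get("path", "/")

    if event.get("httpMethod") == "GET" and path == "/health":
        status, description = 200, "200 OK"
        payload = {"status": "healthy"}
    else:
        status, description = 404, "404 Not Found"
        payload = {"error": f"no route for {path}"}

    return {
        "statusCode": status,
        "statusDescription": description,
        "isBase64Encoded": False,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }
```

With a path-based routing rule on the load balancer, only requests for the migrated route would reach this function, and the rest of the monolith keeps serving traffic as before.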

Read: Application Load Balancer can now Invoke Lambda Functions to Serve HTTP(S) Requests and Lambda functions as targets for Application Load Balancers

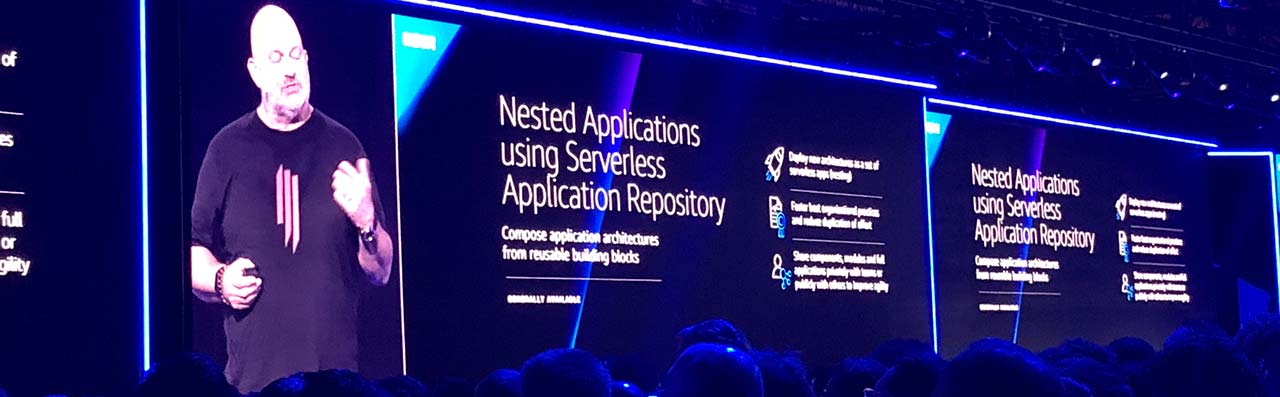

Nested Apps using Serverless Application Repository

The Serverless Application Repository is loaded with prebuilt components that you can easily deploy to your own account. While this has been a useful playground, up until now, it wasn't really integrated into any type of development workflow. This new announcement allows developers to build public (or private) components that can be composed as CloudFormation nested stacks.

This means that entire applications can be stitched together by simply referencing the ARN and version number of an application in the SAR and then passing in some parameters. This is another powerful addition to our workflows since we can dramatically reduce the need to create and manage redundant code. See this example app being nested by this sample to see how it works. Also, it uses SemVer, so you could easily share apps across your organization without worrying about breaking changes.
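
As a rough sketch of what that reference looks like, the snippet below builds a SAM template (in its JSON form) that nests one application from the SAR. The application ARN, account ID, version, and the `LogLevel` parameter are all placeholders invented for this example.

```python
import json

# Hypothetical parent template that nests a single SAR application.
template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Transform": "AWS::Serverless-2016-10-31",
    "Resources": {
        "SharedLogger": {
            "Type": "AWS::Serverless::Application",
            "Properties": {
                "Location": {
                    # Placeholder ARN; substitute a real application's ARN.
                    "ApplicationId": (
                        "arn:aws:serverlessrepo:us-east-1:123456789012:"
                        "applications/shared-logger"
                    ),
                    # Pinning a version protects you from breaking changes.
                    "SemanticVersion": "1.0.0",
                },
                # Parameters passed down to the nested application.
                "Parameters": {"LogLevel": "INFO"},
            },
        }
    },
}

print(json.dumps(template, indent=2))
```

Upgrading the shared component then becomes a one-line change to `SemanticVersion` in each consuming template.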

Amazon DynamoDB On-Demand

DynamoDB got a couple of upgrades this past week as well. There was the announcement about DynamoDB now supporting transactions, which is a pretty big deal. However, I'm really interested in the new On-Demand capacity mode. In the past, you would need to provision your capacity and then enable autoscaling in order to handle spikes in traffic. While this was a very useful feature, scaling operations often created periods of unused capacity, resulting in additional charges to your account.

With On-Demand capacity, you effectively scale down to zero if your table is not being actively read from or written to. This means you only pay for the number of request units you use. If you have highly predictable workloads, then this isn't for you. However, if you get occasional spikes in traffic, or the table is infrequently used, this will save you a lot of money.
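
A quick back-of-the-envelope comparison shows why the workload shape matters. The prices below are illustrative placeholders rather than quoted AWS rates, and the sketch assumes every request consumes exactly one request unit, so plug in current pricing for your region before drawing conclusions.

```python
def on_demand_cost(reads, writes, read_price_per_million, write_price_per_million):
    """Monthly cost when you pay per request unit."""
    return (
        reads / 1_000_000 * read_price_per_million
        + writes / 1_000_000 * write_price_per_million
    )


def provisioned_cost(rcu, wcu, hours, rcu_hour_price, wcu_hour_price):
    """Monthly cost when you pay for capacity units by the hour."""
    return hours * (rcu * rcu_hour_price + wcu * wcu_hour_price)


# Illustrative placeholder prices (assumptions, not official figures).
READ_PER_MILLION = 0.25
WRITE_PER_MILLION = 1.25
RCU_HOUR = 0.00013
WCU_HOUR = 0.00065

# A spiky, mostly idle table: 2M reads and 1M writes over a 730-hour month,
# versus provisioning for the peak (100 RCU / 50 WCU) the whole time.
spiky_on_demand = on_demand_cost(2_000_000, 1_000_000, READ_PER_MILLION, WRITE_PER_MILLION)
spiky_provisioned = provisioned_cost(100, 50, 730, RCU_HOUR, WCU_HOUR)

print(f"on-demand:   ${spiky_on_demand:.2f}")
print(f"provisioned: ${spiky_provisioned:.2f}")
```

Under these assumed numbers the idle-most-of-the-time table is far cheaper on-demand, while a table that keeps its provisioned capacity busy around the clock would tip the comparison the other way.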

Read: Amazon DynamoDB On-Demand – No Capacity Planning and Pay-Per-Request Pricing

Final Thoughts 🤔

AWS re:Invent was an amazing experience, and I only wish I had been able to attend some of the previous ones. The amount of effort that AWS puts into this event is truly amazing, and other than getting a terrible seat at Werner's keynote, I have very few complaints. The announcements just kept on coming one after another, and it's clear to me that AWS's incredible pace of innovation isn't slowing down.

Their advancements in serverless architectures are light-years ahead of most of their competitors at this point. AWS has put every other cloud provider on notice: either listen to your customers and continue to innovate, or get left behind.