Lambda Warmer: Optimize AWS Lambda Function Cold Starts

Annoyed by AWS Lambda cold starts? The Lambda Warmer Node.js module will help you mitigate these issues, including the ability to warm concurrent functions.

At a recent AWS Startup Day event in Boston, MA, Chris Munns, the Senior Developer Advocate for Serverless at AWS, discussed Lambda cold starts and how to mitigate them. According to Chris (although he acknowledged that it is a "hack"), using the CloudWatch Events "ping" method is really the only way to do it right now. He gave a number of really good tips to pre-warm your functions "correctly":

- Don't ping more often than every 5 minutes

- Invoke the function directly (i.e. don't use API Gateway to invoke it)

- Pass in a test payload that can be identified as such

- Create handler logic that replies accordingly without running the whole function
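
To make the last two tips concrete, here is a minimal hand-rolled sketch of a handler that recognizes a test payload and replies without doing any real work. The `warmer` marker field and the `isWarmingEvent` helper are assumptions chosen for illustration; this is not code from the talk.

```javascript
// Hypothetical marker field: any key works, as long as real events never carry it
const isWarmingEvent = (event) => Boolean(event && event.warmer === true)

const handler = async (event) => {
  // Reply immediately to the scheduled ping and skip the real work
  if (isWarmingEvent(event)) return 'warmed'

  // Normal handler logic runs only for real requests
  return 'Hello from Lambda'
}

exports.handler = handler
```

Keeping the check at the very top of the handler means a ping costs only the time it takes to inspect the event.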

He also addressed how to keep several concurrent functions warm. You need to invoke the same function multiple times, each with a delayed execution. This prevents the system from reusing the same container.

💡 There were a lot of great insights in his presentation. I've summarized them for you in my post: 15 Key Takeaways from the Serverless Talk at AWS Startup Day. I highly suggest you read this to learn some serverless best practices directly from the AWS team.

I deal with cold starts quite a bit, especially when using API Gateway. So following these "best practices", I created Lambda Warmer. It's a lightweight Node.js module that can be added to your AWS Lambda functions to manage "warming" ping events. It also handles automatic fan-out for warming concurrent functions. Just instrument your code and schedule a "ping".

Here is an example usage. You simply require the lambda-warmer package and then use it to inspect the event. And that's it! Lambda Warmer will either recognize that this is a "warming" event, scale out if need be, and then short-circuit your function - OR - it will detect that this is an actual request and pass execution off to your main logic.

```javascript
const warmer = require('lambda-warmer')

exports.handler = async (event) => {
  // if a warming event
  if (await warmer(event)) return 'warmed'
  // else proceed with handler logic
  return 'Hello from Lambda'
}
```

💡 Lambda Warmer returns a resolved promise, so you can use it with promise chains too. 😉
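
A promise-chain version might look like the sketch below. So that it runs on its own, `warmer` here is a local stand-in that only checks for a `warmer` field; in a real function you would require the lambda-warmer package instead. The callback-style handler signature is an assumption made for the example.

```javascript
// Stand-in so the sketch runs without the package installed.
// In a real function this would be: const warmer = require('lambda-warmer')
const warmer = (event) => Promise.resolve(Boolean(event && event.warmer))

const handler = (event, context, callback) => {
  // Start a promise chain instead of using await
  warmer(event).then((isWarmer) => {
    if (isWarmer) {
      callback(null, 'warmed')
    } else {
      // proceed with handler logic
      callback(null, 'Hello from Lambda')
    }
  })
}

exports.handler = handler
```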

Why should I use Lambda Warmer? 🤔

It's important to note that you DO NOT HAVE TO WARM all your Lambda functions. First of all, according to Chris, cold starts account for less than 0.2% of all function invocations. That is an incredibly small percentage that will only affect a tiny number of invocations. Secondly, unless cold starts are causing noticeable latency issues with synchronous invocations, there probably isn't a need to warm them.

For example, if you have functions responding to asynchronous or streaming events, no one is going to notice a startup delay of a few hundred milliseconds 0.2% of the time. There probably isn't any reason to warm these functions. If, however, your functions are responding to synchronous API Gateway requests and users periodically experience 5 to 10 seconds of latency, then Lambda Warmer might make sense.

So, if you have to pre-warm your functions, why would you choose Lambda Warmer? First of all, it's open source. I built Lambda Warmer to solve my own problem and shared it with the community so that others can benefit from it. Pull requests, suggestions, and feedback are always welcome. Secondly, it is super lightweight with only one dependency (Bluebird for managing delays). Dependency injection scares me, and given the string of recent npm hacks, minimizing dependencies is becoming a best practice.

And then there's this:

As the old saying goes, "If it's good enough for Chris Munns, it's good enough for me!" 😂

But seriously, my goal was to follow best practices and make it easily shareable with others. If this helps a few more people adopt serverless or makes their apps a bit more responsive, awesome. 🙌

How does Lambda Warmer work? 👷‍♂️

The code for Lambda Warmer is available on GitHub and is MIT licensed. Feel free to contribute or fork it and create your own implementation.

There are a number of configuration options, all explained in the documentation, so I won't repeat them here. I do want to outline the basics since the default implementation will probably be sufficient for most use cases.

❗️IMPORTANT NOTE: Lambda Warmer facilitates the warming of your functions by analyzing invocation events and appropriately managing handler processing. It DOES NOT create or manage CloudWatch Rules or other services that periodically invoke your functions.

You can create a rule manually, using a SAM template, or using the Serverless framework. You could also write another Lambda function that periodically invokes your functions (maybe even with some smarter rules). Regardless of how you automate the "ping" of your functions, the two most important things are:

- Non-VPC functions are kept warm for approximately 5 minutes whereas VPC-based functions are kept warm for 15 minutes. Set your schedule for invocations accordingly. There is no need to ping your functions more often than the minimum warm time. AND

- Functions must be invoked with a JSON object like this:

{ "warmer": true, "concurrency": 3 }. The warmer and concurrency field names can be changed using the configuration options.

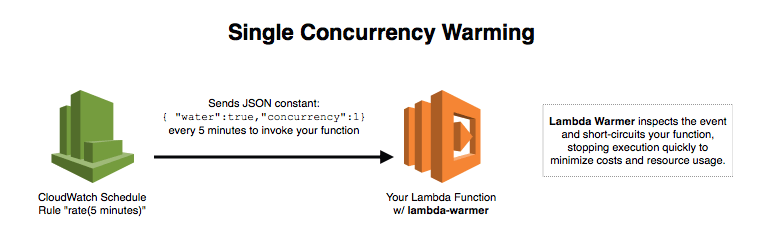
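
If you go the "another Lambda function" route, a pinger could be sketched roughly as follows. `buildWarmingParams`, `pingAll`, and the target list are hypothetical names for this example. The `invoke` argument is injected so the sketch runs without the AWS SDK; with AWS SDK v2 it could be something like `(params) => lambda.invoke(params).promise()`.

```javascript
// Parameters for a direct invocation (no API Gateway in between).
// FunctionName, InvocationType and Payload are AWS SDK Lambda invoke parameters.
const buildWarmingParams = (functionName, concurrency = 1) => ({
  FunctionName: functionName,
  InvocationType: 'RequestResponse',
  Payload: JSON.stringify({ warmer: true, concurrency })
})

// Ping every target in parallel using the injected invoke function
const pingAll = (targets, invoke) =>
  Promise.all(
    targets.map(({ name, concurrency }) => invoke(buildWarmingParams(name, concurrency)))
  )

// Hypothetical target list, for illustration only
const targets = [
  { name: 'my-test-function', concurrency: 3 },
  { name: 'my-other-function' } // falls back to a concurrency of 1
]
```

The pinger itself would still need a schedule, so this only moves the CloudWatch rule to one place rather than removing it.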

When your function is pinged with a concurrency of 1, the process is quite simple:

Lambda Warmer inspects the event, determines it's a "warming" event, and then short-circuits your code without executing the rest of your handler logic. This saves you money by minimizing the execution time. If the function isn't warm, it may take a few hundred milliseconds to start up. But if it is warm, it should be less than 100ms.

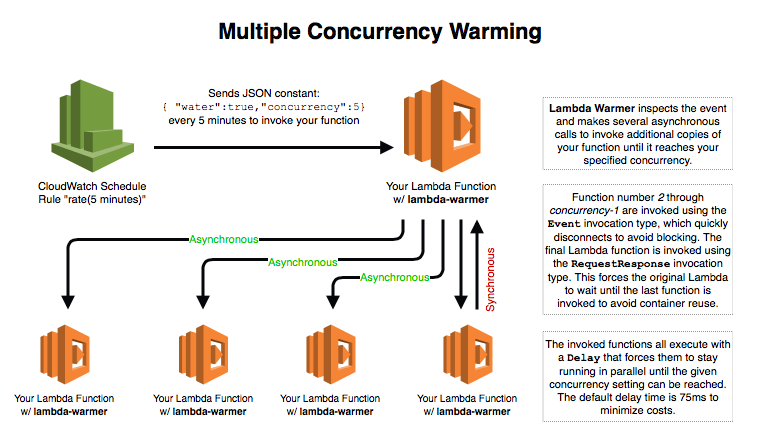

If you are warming your function with a concurrency greater than 1, then Lambda Warmer handles that for you as well:

As with single concurrency warming events, Lambda Warmer inspects the event to determine if it is a "warming" invocation. If it is, Lambda Warmer attempts to invoke copies of the function to simulate concurrent load. It does this using the AWS SDK, which means that your function needs the lambda:InvokeFunction permission. The invocations are asynchronous and use the Event type to avoid blocking. Each invoked function executes with a delay that keeps that function busy while others are starting. The last invocation uses the RequestResponse type so that the initial function doesn't end before the final invocation is made. This prevents the system from reusing any of the running containers.

Lambda Warmer is invoking copies of the same function by sending it a similar "warming" event, which is handled appropriately for you.
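
The fan-out described above can be approximated with the simplified sketch below. It is not Lambda Warmer's actual source: the `copy` field, the function names, and the 75 ms default delay are assumptions made for illustration, and `invoke` is injected so the code runs without the AWS SDK.

```javascript
const delay = (ms) => new Promise((resolve) => setTimeout(resolve, ms))

// Invoke parameters for copies 2..N; copy 1 is the function that is already running.
// `copy` is a hypothetical field used here to mark fanned-out invocations.
const buildFanOut = (functionName, concurrency) => {
  const invocations = []
  for (let copy = 2; copy <= concurrency; copy++) {
    invocations.push({
      FunctionName: functionName,
      // Fire-and-forget for all but the last copy, which is waited on
      InvocationType: copy === concurrency ? 'RequestResponse' : 'Event',
      Payload: JSON.stringify({ warmer: true, concurrency: 1, copy })
    })
  }
  return invocations
}

// Returns true for warming events (after fanning out) and false for real requests
const warm = async (event, invoke, functionName, delayMs = 75) => {
  if (!event || event.warmer !== true) return false
  if (event.copy) {
    await delay(delayMs) // stay busy so this container is not handed another copy
    return true
  }
  const invocations = buildFanOut(functionName, event.concurrency || 1)
  await Promise.all(invocations.map((params) => invoke(params)))
  return true
}
```

Because the final copy is invoked with RequestResponse, the first function only returns once that copy has finished its delay.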

What's the purpose of logging "warming" events? 📊

Logs are automatically generated unless the log configuration option is set to false. I personally like the logs because they contain useful information beyond just invocation data. The warm field indicates whether or not the Lambda function was already warm when invoked. The lastAccessed field is the timestamp (in milliseconds) of the last time the function was accessed by a non-warming event. Similarly, the lastAccessedSeconds field gives you a counter (in seconds) of how long it has been since the function was last accessed.

You can use these to create metric filters in CloudWatch, which could give you some really interesting stats. Armed with this information, you can determine things like whether your concurrency needs to be lowered (or raised).

Here is a sample log:

```javascript
{
  action: 'warmer', // identifier
  function: 'my-test-function', // function name
  id: '1531413096993-0568', // unique function instance id
  correlationId: '1531413096993-0568', // correlation id
  count: 1, // function number of total concurrent e.g. 3 of 10
  concurrency: 2, // number of concurrent functions being invoked
  warm: true, // was this function already warm
  lastAccessed: 1531413096995, // timestamp (in ms) of last non-warming access
  lastAccessedSeconds: '25.6' // time since last non-warming access
}
```
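
As a rough illustration of the kind of stat these fields make possible, the sketch below counts how many warming pings found a warm container. `summarizeWarmerLogs` is a hypothetical helper and the sample entries are made up; it assumes the log lines have already been parsed into objects shaped like the sample above.

```javascript
// Count warm vs. cold warming pings from already-parsed log entries
const summarizeWarmerLogs = (entries) => {
  const warmers = entries.filter((entry) => entry.action === 'warmer')
  const warm = warmers.filter((entry) => entry.warm === true).length
  return {
    total: warmers.length,
    warm,
    cold: warmers.length - warm,
    warmRatio: warmers.length ? warm / warmers.length : 0
  }
}

// Made-up entries for illustration only
const sample = [
  { action: 'warmer', function: 'my-test-function', count: 1, concurrency: 2, warm: true },
  { action: 'warmer', function: 'my-test-function', count: 2, concurrency: 2, warm: false }
]

console.log(summarizeWarmerLogs(sample))
// { total: 2, warm: 1, cold: 1, warmRatio: 0.5 }
```

A ratio like this is only one input; it is worth reading alongside lastAccessedSeconds before changing your concurrency setting.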

Warm and fuzzy Lambda functions

I hope you find this module useful and that it helps you overcome (or at least lessen) this little annoyance as much as possible. I said in a previous post about cold starts:

I'm excited to find new and creative ways that serverless infrastructures can solve real-world problems. As soon as the cold start issue is fully addressed, the possibilities become endless.

I still believe this is true and that serverless is the future. It was also nice to finally meet Chris Munns in person and hear his thoughts on the issue. He relayed that the AWS Lambda team is well aware of the cold start issue and is working diligently to address it. This certainly makes me feel better to know that it is a priority for them.

But even with this outstanding issue, we can still feel warm and fuzzy about the future of serverless and do what we can today by following best practices to keep our Lambdas warm and fuzzy too. ⚡️❤️🙌