The Dynamic Composer (an AWS serverless pattern)

A serverless microservice pattern for dynamically composing AWS Lambda functions with guarantees similar to those of Step Functions.

I'm a big fan of following the Single Responsibility Principle when creating Lambda functions in my serverless applications. The idea of each function doing "one thing well" allows you to easily separate discrete pieces of business logic into reusable components. In addition, the Lambda concurrency model, along with the ability to add fine-grained IAM permissions per function, gives you a tremendous amount of control over the security, scalability, and cost of each part of your application.

However, there are several drawbacks with this approach that often attract criticism. These include things like increased complexity, higher likelihood of cold starts, separation of log files, and the inability to easily compose functions. I think there is merit to these criticisms, but I have personally found the benefits to far outweigh any of the negatives. A little bit of googling should help you find ways to mitigate many of these concerns, but I want to focus on the one that seems to trip most people up: function composition.

The need for function composition

At my current startup, we have several Lambda functions that handle very specific pieces of functionality as part of our article processing pipeline. We have a function that crawls a web page and then extracts and parses the content. We have a function that runs text comparisons and string similarity against existing articles to detect duplicates, syndicated articles, and story updates. We have one that runs content through a natural language processing (NLP) engine to extract entities, keywords, sentiment, and more. We have a function that performs inference and applies tagging and scores to each article. Plus many, many, many more.

In most cases, the data needs to flow through all of these functions in order for it to be usable in our system. It's also imperative that the data be processed in the correct order, otherwise some functions might not have the data they need to perform their specific task. That means we need to "orchestrate" these functions to ensure that each step completes successfully.

If you're familiar with the AWS ecosystem, you're probably thinking, "why not just use Step Functions?" I love Step Functions, and we initially started going down that path. But then we realized a few things that started making us question that decision.

First, we were composing a lot of steps, so the number of possible transitions required for each article (including failure states) became a bit unwieldy and possibly cost-prohibitive. Second, the success guarantee requirement for this process is very low. Failing to process an article every now and then is not a make-or-break situation, and since most of our functions provide data enrichment features, there is little to no need for issuing rollbacks. And finally, while the most utilized workflow is complete end-to-end article processing, this isn't always the case. Sometimes we need to execute just a portion of the workflow, like rerunning the NLP or the tagger. Sometimes we'll even run part of the workflow for debugging purposes, so having a lot of control is really important.

Could we have built a whole bunch of Step Functions to handle all of these workflows? I'm sure we could have. But after some research and experimentation, we found a pattern that was simple, composable, flexible, and still had all the retry and error handling guarantees that we would likely have used with Step Functions. I call it the Dynamic Composer.

The Dynamic Composer Pattern

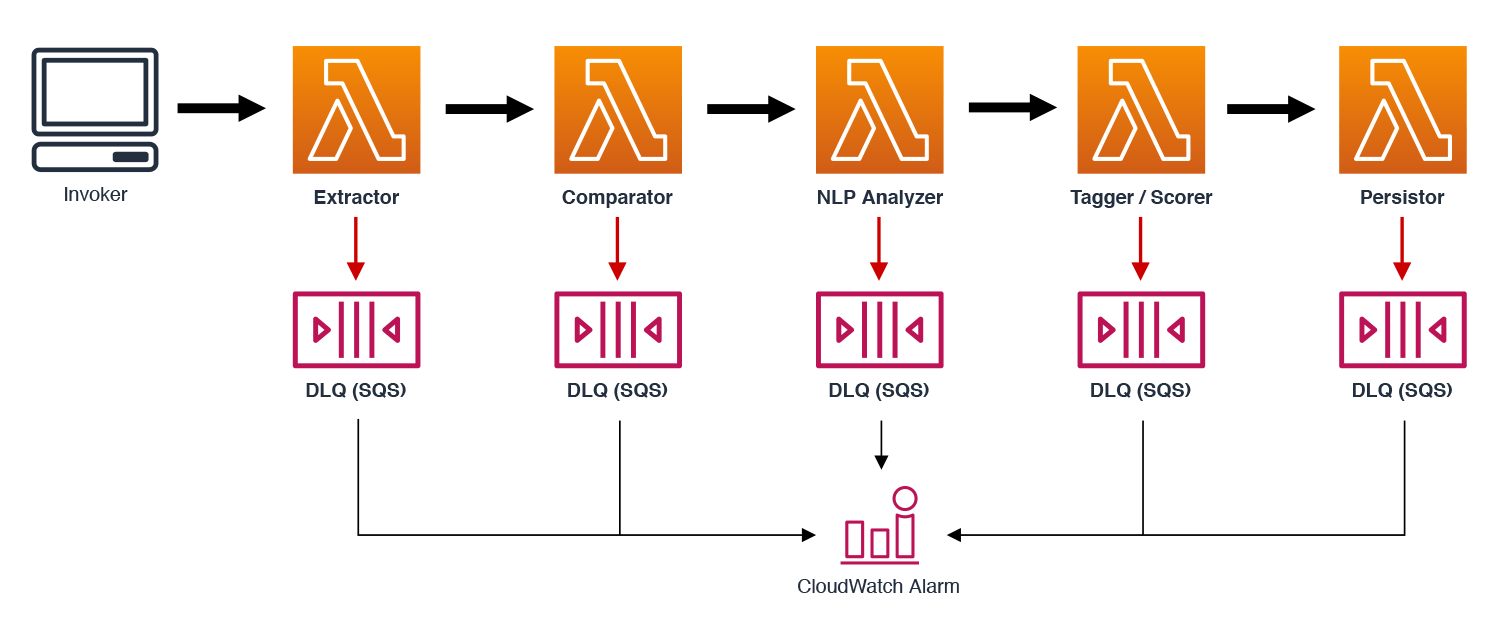

The key to this pattern is the utilization of ASYNCHRONOUS function invocations (yes, Lambdas calling Lambdas). The simple diagram above shows the composition of five functions (Extractor, Comparator, NLP Analyzer, Tagger, and Persistor). This is only a small subset of our process for illustration purposes. This pattern can be used to compose as many functions as necessary.

Each of the thick black arrows represents an asynchronous call to the next Lambda function in the workflow. Each Lambda function has an SQS queue for its Dead Letter Queue (DLQ), and each DLQ is attached to a CloudWatch Alarm to issue an alert if the queue contains any messages. Now for the "dynamic" part.

When we invoke the first function in our workflow (this can be from another function, API Gateway, directly from a client, etc.), we pass an array of function names with our event. For example, to kick off the full workflow above, our payload for our extractor function might look like this:

```javascript
{
  "url": "https://www.cnn.com/some-article-to-process/index.html",
  "_compose": ["comparator", "nlp-analyzer", "tagger", "persistor"]
}
```

The _compose array contains the order of composition, and can be dynamically generated based on your invocation. If you only wanted to run the tagger and the persistor, you would adjust your _compose array as necessary.
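One way to generate the array is to keep the full ordering in one place and slice it based on where you want to start. Here's a minimal sketch, assuming the five function names from the example above. `PIPELINE`, `buildInvocation`, and the `articleId` field are hypothetical names for illustration, not part of our actual setup.

```javascript
// Hypothetical full ordering, using the function names from the example above.
const PIPELINE = ['extractor', 'comparator', 'nlp-analyzer', 'tagger', 'persistor']

// Returns the function to invoke first, plus the payload carrying the
// remaining steps in `_compose`. `startAt` and `stopAfter` are illustrative.
const buildInvocation = (data, startAt = PIPELINE[0], stopAfter = PIPELINE[PIPELINE.length - 1]) => {
  const from = PIPELINE.indexOf(startAt)
  const to = PIPELINE.indexOf(stopAfter)
  if (from === -1 || to === -1 || from > to) {
    throw new Error(`Invalid range: ${startAt} -> ${stopAfter}`)
  }
  const [first, ...rest] = PIPELINE.slice(from, to + 1)
  return { FunctionName: first, payload: { ...data, _compose: rest } }
}

// Full workflow: invoke the extractor, compose the other four
const full = buildInvocation({ url: 'https://www.cnn.com/some-article-to-process/index.html' })

// Partial workflow: invoke the tagger, then the persistor
const partial = buildInvocation({ articleId: 'hypothetical-id' }, 'tagger')
```

Note that the first function is invoked directly, so it never appears in its own `_compose` list.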

In order for our functions to properly handle the _compose array, we need to include a small library script in each of our functions. Below is a sample composer.js script that accepts the event from the function, as well as the payload that it should return or pass to the next function. If there are no more functions in the composition list (or it doesn't exist at all), then the payload is returned.

```javascript
const AWS = require('aws-sdk')
const LAMBDA = new AWS.Lambda()

module.exports = async (event,data) => {
  if (Array.isArray(event._compose) && event._compose.length > 0) {

    // grab the next function
    let fn = event._compose.shift()

    // ensure the _compose list is passed
    let payload = Object.assign(data,{ _compose: event._compose })

    console.log(`Invoking ${fn}`)

    let result = await LAMBDA.invoke({
      FunctionName: fn,
      InvocationType: 'Event',
      LogType: 'None',
      Payload: Buffer.from(JSON.stringify(payload))
    }).promise()

    return { status: result.StatusCode }
  } else {
    return data
  }
}
```

In order to use this within your functions, you would include it like this:

```javascript
const composer = require('./composer')

exports.extractor = async event => {

  // Do some fancy processing...

  // Then merge the event to the function result
  let result = Object.assign({
    article: {
      title: 'This is an article title',
      created: new Date().toISOString()
    }
  },event)

  // pass the original event and function result to the composer
  return composer(event,result)
}
```

Yes, we have to add a bit of helper code to our functions, but we still have the ability to call each function synchronously, and our composer.js script will just return the function output.
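To see both behaviors side by side, here's a rough sketch that runs locally without the AWS SDK. It assumes the same logic as composer.js, except that the invoker is injected and the event is copied rather than mutated. `makeComposer`, `fakeInvoke`, and the sample `tagger` handler are hypothetical names used only for this illustration.

```javascript
// Same logic as composer.js, but the invoker is injected so the sketch runs
// without the AWS SDK. `makeComposer` and `fakeInvoke` are hypothetical names.
const makeComposer = invoke => async (event, data) => {
  if (Array.isArray(event._compose) && event._compose.length > 0) {
    const [fn, ...rest] = event._compose
    const payload = { ...data, _compose: rest }
    const result = await invoke({
      FunctionName: fn,
      InvocationType: 'Event',
      Payload: JSON.stringify(payload)
    })
    return { status: result.StatusCode }
  }
  return data
}

// Stand-in for LAMBDA.invoke(...).promise(); records calls instead of sending them.
const calls = []
const fakeInvoke = async params => { calls.push(params); return { StatusCode: 202 } }

const composer = makeComposer(fakeInvoke)
const tagger = async event => composer(event, { ...event, tags: ['example'] })

const demo = async () => {
  // No _compose: the function output comes straight back
  const direct = await tagger({ id: 1 })
  // With _compose: the output is forwarded and only the status is returned
  const composed = await tagger({ id: 1, _compose: ['persistor'] })
  return { direct, composed }
}
```

Injecting the invoker is just a convenience for testing. In a deployed function you would pass something like `params => LAMBDA.invoke(params).promise()` instead.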

Wouldn't Step Functions be more reliable?

That entirely depends on the use case and whether or not you need to add things like rollbacks and parallelism, or have more control over the retry logic. For us, this pattern works really well and gives us all kinds of amazing guarantees. For example:

Automatic Retry Handling

Because we are invoking the next function asynchronously, the Lambda service will automatically retry the event twice for us. The first retry is typically within a minute, and the second is after about two minutes.

Error Handling

There are two ways in which this pattern will automatically handle errors for us. The first is if the LAMBDA.invoke() call fails at the end. This is highly unlikely, but since we allow this error to bubble up to the Lambda function, a failed invocation will FAIL THIS FUNCTION, causing it to retry as mentioned above. If it fails three times, it will be saved in our DLQ. The other scenario is when the Lambda service accepts the invocation, but then that function fails. Just like with the first scenario, it will be retried twice, and then moved to the DLQ.
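The important detail in the first scenario is that the error is not caught. Here's a small sketch of the difference, using a stand-in for a failing invoke call. The names `failingInvoke`, `bubbling`, `swallowing`, and `outcome` are made up for this example.

```javascript
// Stand-in for a LAMBDA.invoke() call that fails (hypothetical error).
const failingInvoke = async () => { throw new Error('invoke failed') }

// Errors bubble up: the handler's promise rejects, so Lambda records a
// failure and (for asynchronous invocations) retries, then uses the DLQ.
const bubbling = async event => {
  await failingInvoke(event)
  return { status: 'ok' }
}

// Anti-pattern: catching the error makes the invocation look successful,
// so there is no retry and nothing reaches the DLQ.
const swallowing = async event => {
  try {
    await failingInvoke(event)
  } catch (err) {
    console.log(`Ignored: ${err.message}`)
  }
  return { status: 'ok' }
}

// Helper that reports how a handler's promise settled
const outcome = handler => handler({}).then(() => 'succeeded', () => 'failed')
```

If you do need to catch errors for logging, rethrowing them afterwards keeps the retry and DLQ behavior intact.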

Durability and Replay

Since any failed event will be moved to the appropriate DLQ, that event will be available for inspection and replay for up to 14 days (the maximum SQS message retention period; the default is 4 days, so the queue needs to be configured accordingly). Our CloudWatch Alarms will notify us if there is an item in one of these queues, and we can easily replay the event without losing the information about the workflow (since it is part of the event JSON). If there was a particular use case that needed to be automatically replayed, an extra Lambda function polling the DLQs could handle that for you.
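A replay function along those lines might look roughly like this. It's a sketch, assuming the DLQ message body holds the original event JSON (including `_compose`) and that the replayer is subscribed to a single DLQ through an SQS event source. `buildReplayParams` and `makeReplayer` are hypothetical names, and `invoke` stands in for `params => LAMBDA.invoke(params).promise()`.

```javascript
// Builds the parameters for re-invoking a function from one DLQ record.
// Assumes the message body is the original event JSON (including _compose).
const buildReplayParams = (record, functionName) => {
  const event = JSON.parse(record.body) // throws on a malformed message
  return {
    FunctionName: functionName,
    InvocationType: 'Event',
    Payload: JSON.stringify(event)
  }
}

// Hypothetical handler for a Lambda function subscribed to one DLQ.
// `invoke` stands in for params => LAMBDA.invoke(params).promise().
const makeReplayer = (functionName, invoke) => async sqsEvent => {
  for (const record of sqsEvent.Records) {
    await invoke(buildReplayParams(record, functionName))
  }
  return { replayed: sqsEvent.Records.length }
}
```

Because the remaining steps travel with the event, replaying into the function that failed resumes the workflow from that point. An event that fails every time would cycle back into the DLQ, so an automatic replayer probably wants some kind of attempt limit.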

Automatic Throttling

There are a lot of great throttling control use cases for SQS to Lambda, but many people don't realize that asynchronous Lambda invocations give you built-in throttling management for free! If you invoke a Lambda function asynchronously and the current reserved concurrency is exceeded, the Lambda service will retry for up to six hours before eventually moving the event to your DLQ.

Should you use this pattern?

I have to reiterate that Step Functions are amazing, and that for complex workflows that require rollbacks, or very specific retry policies, they are absolutely the way to go. However, if your workflows are fairly simple, meaning that they mostly pass the results of one function to the next, and if you want to have the flexibility to alter your workflows on the fly, then this may work really well for you.

Your functions shouldn't require any sort of significant rewrites to implement this. Just plug in your own version of the composer.js script and change the return. You could even build a Lambda Layer that wraps the functions and automatically adds the composer for you. The functions themselves will still be independently callable and can still be included in other Step Functions workflows. But you always have the ability to compose them together by simply passing an _compose array.
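The wrapping idea can be sketched as a small higher-order function. This is only an illustration of the approach, not a packaged layer: `withComposer` is a hypothetical helper, and it assumes a composer with the same `(event, data)` signature as the composer.js script.

```javascript
// Wraps a plain handler so it no longer needs to call the composer itself.
// `withComposer` is a hypothetical helper; `composer` is expected to have the
// same (event, data) signature as the composer.js script.
const withComposer = (handler, composer) => async (event, context) => {
  const result = await handler(event, context)
  return composer(event, result)
}
```

With something like this in place, a function could be exported as `exports.extractor = withComposer(extract, require('./composer'))`, where `extract` is the plain business-logic handler.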

And there's one more very cool thing (well, at least I think it's cool)! Even though this sort of violates the Single Responsibility Principle that I mentioned at the beginning, we couldn't help adding some "composition manipulation" within our functions. For example, if our comparator function detects that an article is a duplicate, then we will alter the _compose array to remove parts of the workflow. This adds a bit of extra logic to our functions, but it gives us some really handy controls over the workflow. Of course, this logic is conditioned on whether or not _compose exists, so we can still call the functions independently.
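As a rough idea of what that manipulation can look like, here's a sketch of a helper the comparator might call before handing off to the composer. Which steps get dropped for a duplicate is an assumption made for this example, and `SKIP_FOR_DUPLICATES` and `pruneCompose` are hypothetical names.

```javascript
// Steps that are pointless for a duplicate article (an assumption for
// illustration; the real list depends on your workflow).
const SKIP_FOR_DUPLICATES = ['nlp-analyzer', 'tagger']

// Returns a copy of the event with the workflow trimmed. Events without a
// _compose array are returned unchanged, so direct calls still work.
const pruneCompose = (event, isDuplicate) => {
  if (!isDuplicate || !Array.isArray(event._compose)) return event
  return {
    ...event,
    _compose: event._compose.filter(fn => !SKIP_FOR_DUPLICATES.includes(fn))
  }
}
```

The comparator would then pass the pruned event to the composer in place of the original one, so the next invocation only sees the trimmed list.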

So, should you use this pattern? Well, we use it to process thousands of articles a day and it works like a charm for us. If you have a workflow that this might work for, I'd love to hear about it.

Hope you find this useful. Happy serverlessing! 😉